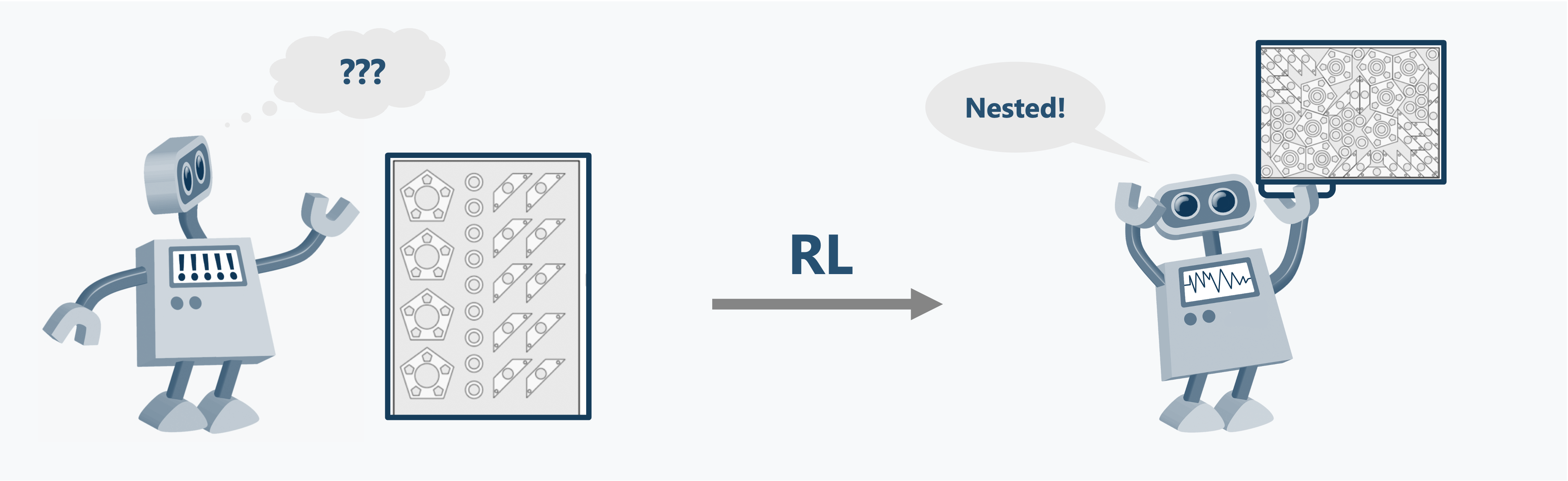

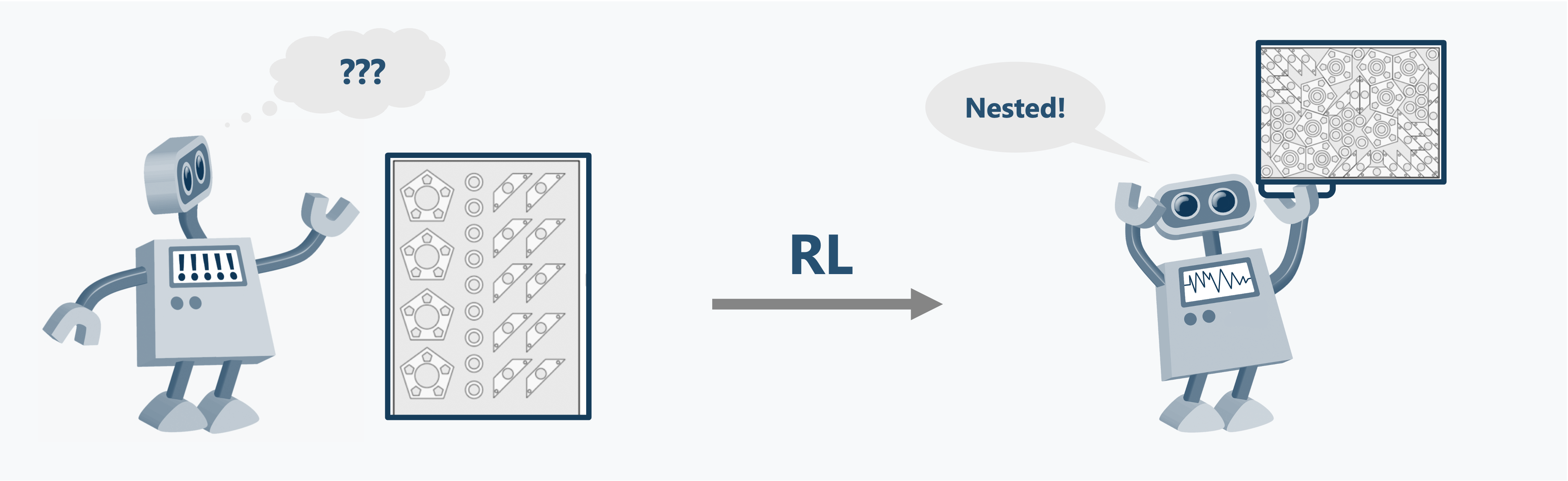

Learning Nesting with Deep Reinforcement Learning

In manufacturing, nesting refers to the process of laying out irregularly shaped patterns while optimizing for a given objective. Nesting problems in industrial settings are therefore primarily concerned with finding the layout of parts that minimizes both raw material waste and processing time. However, traditional nesting algorithms are time-inefficient, making it difficult to maintain operational efficiency.

By increasing computational efficiency, reinforcement learning (RL) can learn tasks where exhaustive search methods are infeasible, and it is increasingly being used to outperform traditional methods in a variety of manufacturing disciplines. However, existing RL algorithms that seek to solve nesting or related packing problems are either time-inefficient, only applicable to simpler rectangle packing cases, or include constraints that make them unsuitable for industrial application.

To address the time-efficiency challenges of traditional and RL-based methods, I developed an RL algorithm that achieves performance comparable to that of traditional nesting algorithms while being faster than state-of-the-art nesting software and search heuristics on the same computing hardware. At the same time, it eliminates major limitations of previously developed methods and demonstrates generalization capabilities by maintaining its performance on unseen shapes within its learned domain.

More details: Learning to Nest Irregular Two-Dimensional Parts Using Deep Reinforcement Learning.

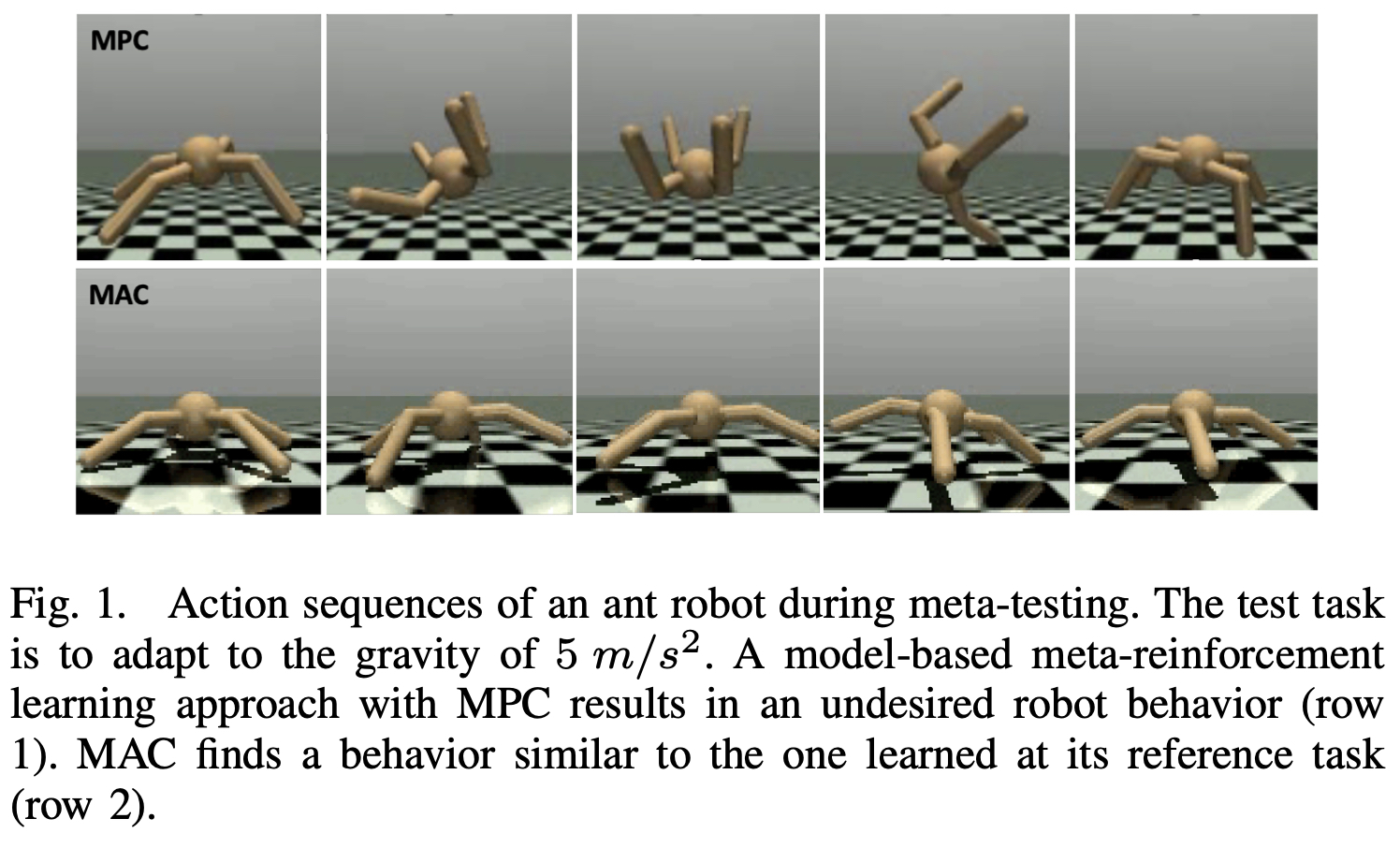

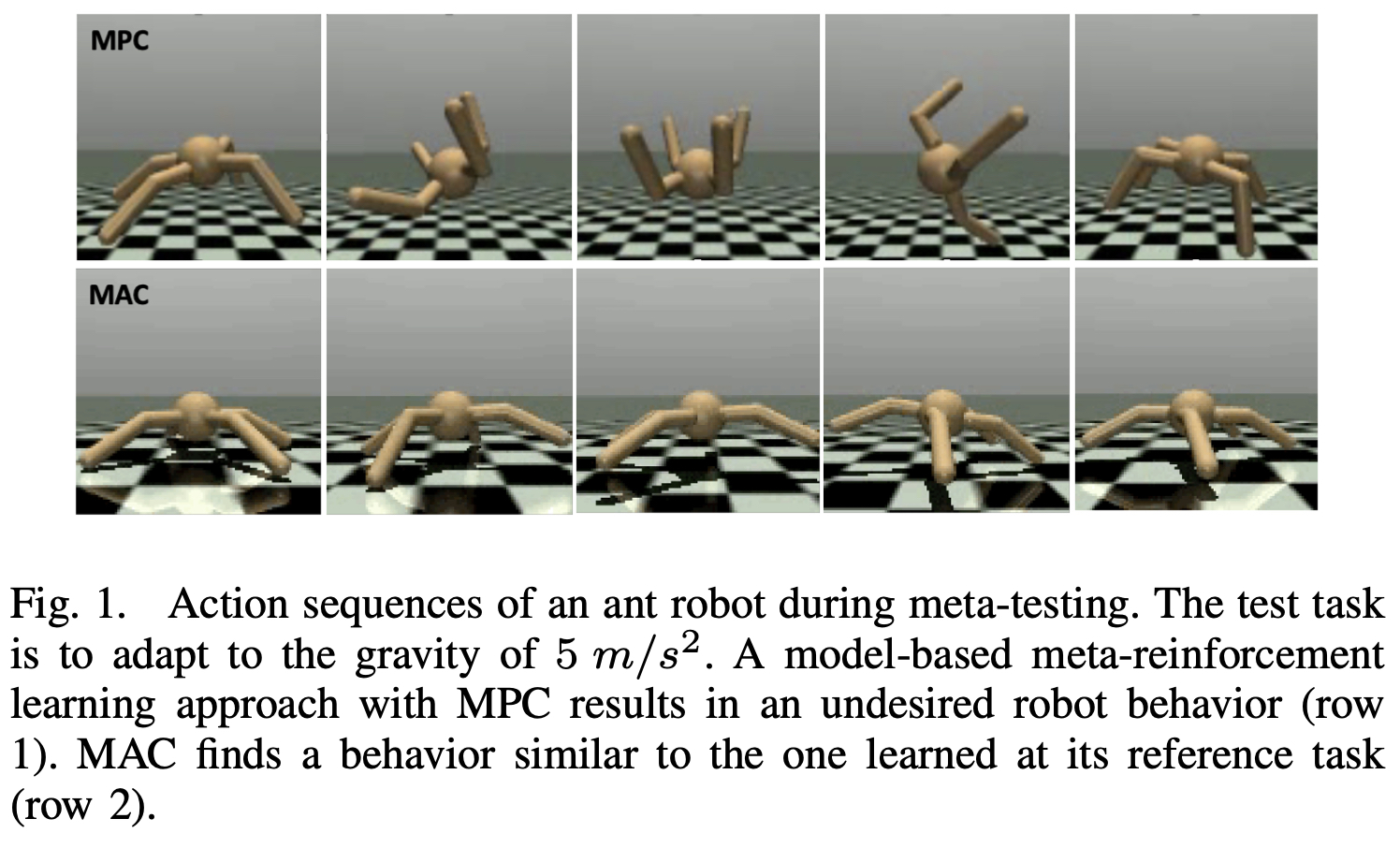

Meta-Learning Using World Models and Planning

In machine learning, meta-learning methods aim for fast adaptability to unknown tasks using prior knowledge. Model-based meta-reinforcement learning combines planning via world models with meta-learning to apply this adaptive behavior to agents. However, adaptation to unknown tasks does not always result in preferable agent behavior.

In this research, we introduced a controller that makes it possible to transfer a preferred robot behavior from one task to many similar tasks. To do this, it searches for the actions an agent has to take in a new task in order to reach an outcome similar to the one reached in a learned task. As a result, it alleviates the need to construct a reward function that enforces the preferred behavior.
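The idea of matching outcomes across tasks can be illustrated with a toy sketch. This is not the controller from the paper: the two world models, the grid of candidate actions, and all function names are hypothetical, and a simple exhaustive search over candidates stands in for whatever planner is actually used. It only shows how an action for a new task could be chosen so that its predicted outcome matches the outcome of a preferred action in a learned task, without defining a reward function.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equally sized vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def select_action(state, reference_outcome, world_model, candidates):
    """Return the candidate whose predicted outcome is closest to the reference."""
    return min(
        candidates,
        key=lambda action: squared_distance(
            world_model(state, action), reference_outcome
        ),
    )


# Hypothetical world model of the learned task: actions shift the state directly.
def learned_task_model(state, action):
    return [s + a for s, a in zip(state, action)]


# Hypothetical world model of the new task: actuators are only half as effective.
def new_task_model(state, action):
    return [s + 0.5 * a for s, a in zip(state, action)]


state = [0.0, 0.0]
preferred_action = [1.0, -1.0]

# Outcome of the preferred behavior in the learned task.
reference_outcome = learned_task_model(state, preferred_action)

# Candidate actions on a regular grid from -3.0 to 3.0 in steps of 0.25.
grid = [-3.0 + 0.25 * i for i in range(25)]
candidates = [[a0, a1] for a0 in grid for a1 in grid]

# Action in the new task whose predicted outcome matches the reference.
best = select_action(state, reference_outcome, new_task_model, candidates)
predicted = new_task_model(state, best)
```

In this toy setting the selected action is twice as large as the preferred one, which compensates for the weaker actuators assumed in the new task.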

More details: Robotic Control Using Model Based Meta Adaption.